Using Python to implement proxy IP configuration and management in web data crawling

Using proxy IPs is a common technique in web data crawling (web scraping). Routing requests through proxies helps you avoid being blocked by the target website and keeps large crawls running efficiently. This article shows how to use Python to configure and manage proxy IPs for web data crawling.

1. Why do you need a proxy IP?

When you crawl data at scale, websites usually limit frequent requests from the same IP address to prevent excessive crawling. Such restrictions may include:

IP blocking: If the same IP address sends too many requests, the target website may block it.

Rate limiting: The website caps the number of requests each IP address can make per unit of time.

CAPTCHA: The website triggers a CAPTCHA challenge to stop automated crawling.

Using proxy IPs spreads request traffic across multiple addresses, which reduces the risk of being blocked and improves crawling efficiency.

2. Types of proxy IP

Proxy IPs can be roughly divided into the following categories:

Free proxy: a public proxy IP, usually with poor stability and security.

Paid proxy: an IP provided by a commercial proxy service, usually with higher stability and security.

3. Proxy IP Configuration in Python

Using proxy IPs for web data crawling in Python usually involves the following steps:

3.1 Install necessary libraries

First, we need to install the requests library, which is a simple and easy-to-use HTTP request library. You can install it with pip by running the command pip install requests.

3.2 Configure proxy IP

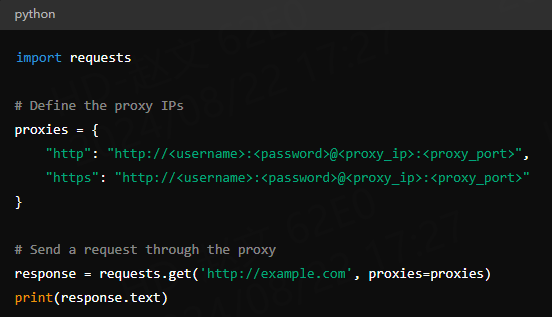

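We can use a proxy IP by setting the proxies parameter in the requests library. The parameter is a dictionary that maps a URL scheme (http or https) to the proxy URL used for requests with that scheme. Here is a simple example: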
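The original listing is not reproduced here, so the following is a minimal sketch of what such a configuration might look like. The helper names build_proxies and fetch are hypothetical, the address 203.0.113.10 is a documentation placeholder rather than a real proxy, and the credentials are dummy values. The requests import is placed inside fetch only so that the dictionary-building part can be tried without the library installed.

```python
from urllib.parse import quote


def build_proxies(proxy_ip, proxy_port, username=None, password=None):
    """Return a proxies mapping in the form the requests library expects."""
    if username and password:
        # Percent-encode credentials so characters such as "@" do not break the URL.
        auth = f"{quote(username, safe='')}:{quote(password, safe='')}@"
    else:
        auth = ""
    proxy_url = f"http://{auth}{proxy_ip}:{proxy_port}"
    # Use the same proxy for both plain HTTP and HTTPS targets.
    return {"http": proxy_url, "https": proxy_url}


def fetch(url, proxies, timeout=10):
    """Send a GET request through the given proxy and return the response body."""
    import requests  # imported here so build_proxies works without requests installed

    response = requests.get(url, proxies=proxies, timeout=timeout)
    response.raise_for_status()
    return response.text


# Placeholder values: replace them with the details from your proxy provider.
proxies = build_proxies("203.0.113.10", 8080, username="username", password="password")
# html = fetch("https://example.com", proxies)  # performs a real network request
```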

In this example, username and password are the credentials issued by the proxy service provider (needed only if the proxy requires authentication), and proxy_ip and proxy_port are the IP address and port number of the proxy server.

3.3 Handle proxy IP pool

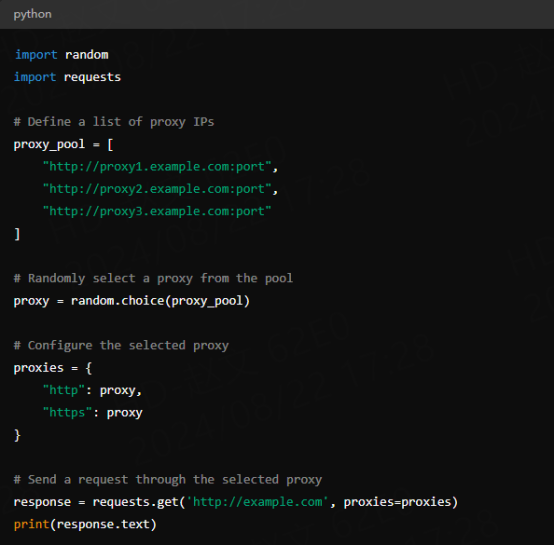

To improve crawling efficiency, we can use a proxy IP pool to manage and rotate proxy IPs automatically. The following is a simple example showing how to select a proxy IP from a proxy IP pool:
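As a rough sketch of this idea, the pool can be a plain list of proxy URLs from which one entry is drawn at random for each request. The PROXY_POOL contents are placeholder addresses, and the function name choose_proxy is an assumption made for illustration; a real pool would typically be loaded from your provider or a configuration file.

```python
import random

# Hypothetical pool: placeholder addresses, not working proxies.
PROXY_POOL = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def choose_proxy(pool):
    """Pick one proxy URL at random and return it as a requests-style mapping."""
    proxy_url = random.choice(pool)
    return {"http": proxy_url, "https": proxy_url}


proxies = choose_proxy(PROXY_POOL)
# requests.get("https://example.com", proxies=proxies, timeout=10)  # real request
```

Random selection is the simplest policy. A round-robin or weighted policy could be substituted without changing the calling code, as long as the function still returns the same kind of mapping.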

3.4 Handle proxy IP exceptions

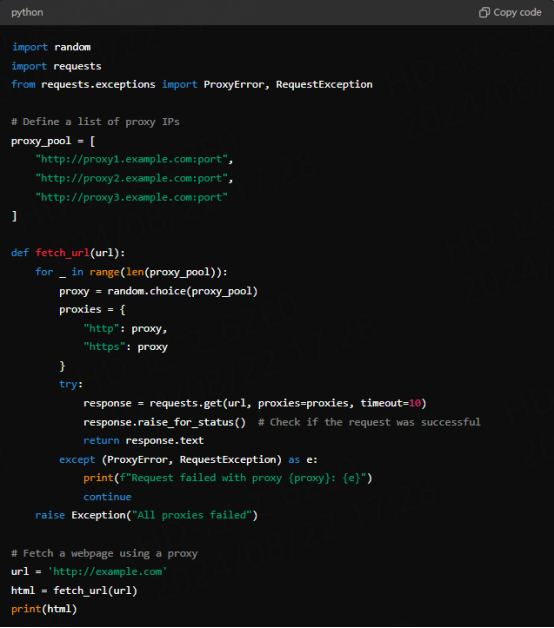

In practice, proxy IPs may become invalid or get blocked. We can handle these problems by catching exceptions and selecting another proxy IP:
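One possible way to structure this is sketched below. The names ProxyRequestFailed, send_with_requests and fetch_with_retry are hypothetical, and treating every requests exception (including HTTP error statuses) as a proxy failure is a simplifying assumption; a production crawler would probably distinguish a dead proxy from a page that genuinely returns an error.

```python
import random


class ProxyRequestFailed(Exception):
    """Raised when a request sent through a particular proxy fails."""


def send_with_requests(url, proxies, timeout=10):
    """Send one GET request through the proxy, translating requests errors."""
    import requests  # imported here so the retry logic can be tested without it

    try:
        response = requests.get(url, proxies=proxies, timeout=timeout)
        response.raise_for_status()
    except requests.exceptions.RequestException as exc:
        # Simplification: any request error counts as a failure of this proxy.
        raise ProxyRequestFailed(str(exc)) from exc
    return response.text


def fetch_with_retry(url, pool, send=send_with_requests, max_attempts=3):
    """Try up to max_attempts proxies, dropping each one that fails."""
    candidates = list(pool)  # work on a copy so the caller's pool is untouched
    last_error = None
    for _ in range(max_attempts):
        if not candidates:
            break
        proxy_url = random.choice(candidates)
        try:
            return send(url, {"http": proxy_url, "https": proxy_url})
        except ProxyRequestFailed as exc:
            last_error = exc
            candidates.remove(proxy_url)  # do not pick the failed proxy again
    raise RuntimeError(f"no working proxy found for {url}") from last_error
```

Passing the send function as a parameter keeps the retry loop independent of the HTTP library, which also makes it easy to test with a stub instead of real network traffic.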

4. Best practices for using proxy IPs

Rotate proxy IPs: Change proxy IPs regularly so that no single IP is used long enough to get banned.

Use high-quality proxies: Choose stable and reliable proxy services and avoid using free public proxies.

Set request intervals: Control the frequency of requests to avoid excessive pressure on the target website.
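Two of these practices, rotation and request intervals, can be sketched in a few lines. The function names rotate_proxies and wait_between_requests and the default delay values are assumptions chosen for illustration, not recommended settings for any particular website.

```python
import itertools
import random
import time


def rotate_proxies(urls, pool):
    """Pair each URL with the next proxy in the pool, in round-robin order."""
    return list(zip(urls, itertools.cycle(pool)))


def wait_between_requests(base_seconds=1.0, jitter_seconds=0.5):
    """Sleep for a base interval plus random jitter and return the delay used."""
    delay = base_seconds + random.uniform(0, jitter_seconds)
    time.sleep(delay)
    return delay
```

A crawl loop could iterate over the pairs returned by rotate_proxies and call wait_between_requests after each request, so that consecutive requests use different proxies and are spaced out in time.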

5. Summary

Using proxy IPs is an important technique for web data crawling. By properly configuring and managing proxy IPs, you can improve crawling efficiency and reduce the risk of being blocked. This article introduced how to configure and manage proxy IPs in Python.