How to use scraping proxy tools to improve LinkedIn data collection

LinkedIn is a valuable source of business data, with hundreds of millions of professional profiles, company information and market insights. However, its strict anti-crawling mechanism makes scraping LinkedIn data directly very difficult, which is why scraping proxy tools are so often part of the solution. This article explains in detail how to use proxy tools to make LinkedIn data collection more efficient and more secure.

1. Why do you need a proxy tool to scrape LinkedIn data?

LinkedIn places strict restrictions on data scraping. Its anti-crawling mechanism identifies and limits unusually high request volumes, which can result in IP addresses being blocked or restricted. A scraping proxy tool helps with the following problems:

Avoid IP blocking: The proxy tool can rotate IP addresses so that requests are spread across many different IPs, which lowers the risk of any single address being blocked.

Improve data capture speed: Using multiple proxy IPs to capture data in parallel can greatly improve data collection efficiency.

Cross-regional data collection: Some data on LinkedIn is displayed differently depending on the region. By using proxy IPs located in different countries, you can view region-specific results and collect data worldwide.

In general, proxy tools play an indispensable role in LinkedIn data collection, helping you break through the technical barriers of the platform.

2. Choose the right scraping proxy tool: residential and data center proxies

When collecting LinkedIn data, it is crucial to choose the right type of proxy. Here are the two main types and their usage scenarios:

Residential proxy

Residential proxies provide real home IP addresses, so websites such as LinkedIn treat their traffic as ordinary user traffic. They offer high anonymity and a lower risk of blocking, but they tend to be slower and more expensive.

Applicable scenarios: Long-term data capture that requires a low detection risk, especially when collecting sensitive information such as user profiles and company details.

Data center proxy

Data center proxy IPs are addresses hosted on servers in data centers rather than assigned to home users. They are cheap and fast, which suits large-scale, high-speed data collection. However, they are relatively easy to detect and block.

Applicable scenarios: Large-scale, short-term jobs that need a large amount of non-sensitive data, such as company lists and job information.

Tip: To increase the success rate, it is usually recommended to combine residential proxies and data center proxies so that security and speed are balanced.
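The mixed-pool tip can be sketched roughly as follows. This is only an illustration: the `Proxy` class, the `pick_pool` function and the addresses (taken from reserved documentation ranges) are hypothetical names invented for this example, and the rule "residential for sensitive targets, data center for bulk targets" simply mirrors the scenarios described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Proxy:
    address: str  # "host:port"; the values below are placeholders
    kind: str     # "residential" or "datacenter"


def pick_pool(proxies, sensitive):
    """Return the proxies that match the task type.

    Sensitive targets (e.g. user profiles) prefer residential IPs;
    bulk targets (e.g. job listings) prefer data center IPs.
    If no proxy of the preferred kind exists, fall back to the full list.
    """
    preferred = "residential" if sensitive else "datacenter"
    chosen = [p for p in proxies if p.kind == preferred]
    return chosen or list(proxies)


# Hypothetical mixed pool
POOL = [
    Proxy("203.0.113.10:8000", "residential"),
    Proxy("198.51.100.20:3128", "datacenter"),
    Proxy("198.51.100.21:3128", "datacenter"),
]

profile_pool = pick_pool(POOL, sensitive=True)   # residential only
listing_pool = pick_pool(POOL, sensitive=False)  # data center only
```

Keeping the two kinds in one list with a `kind` field makes it easy to shift traffic from one type to the other when costs or block rates change.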

3. How to configure a scraping proxy for LinkedIn data collection

Configure proxy IP

When using scraping proxy tools, the most critical step is to configure the proxy IP correctly so that your data collection tool (such as Puppeteer or Scrapy) sends its requests through the proxy. The general steps are as follows:

Get the proxy IP: Choose a suitable proxy service provider and obtain an available proxy IP address and port.

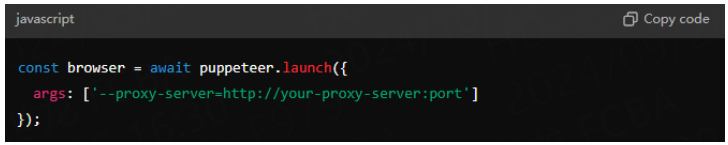

Set up the proxy: Configure the proxy settings in the data collection tool. For example, Puppeteer can set the proxy by passing Chromium's `--proxy-server=host:port` flag in the `args` launch option.

Test the connection: After configuring the proxy, check that you can reach LinkedIn through it to confirm that the proxy settings are correct.

Deal with CAPTCHA issues: CAPTCHA verification is a common problem when scraping LinkedIn data. To avoid frequent verification, you can combine proxy IPs with automated CAPTCHA solving tools.
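The "set up the proxy" and "test the connection" steps above might look roughly like this when done with Python's standard library instead of Puppeteer. The helper names (`proxy_url`, `build_proxy_opener`, `check_proxy`), the address and the credentials are all placeholders for illustration; substitute the values from your own provider.

```python
import urllib.request
from urllib.parse import quote


def proxy_url(host, port, username=None, password=None):
    """Build an http:// proxy URL, with optional URL-encoded credentials."""
    auth = ""
    if username and password:
        auth = f"{quote(username, safe='')}:{quote(password, safe='')}@"
    return f"http://{auth}{host}:{port}"


def build_proxy_opener(proxy):
    """Return an opener that routes HTTP and HTTPS requests through `proxy`."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)


def check_proxy(opener, test_url, timeout=10.0):
    """Return True if `test_url` answers with HTTP 200 through the proxy."""
    try:
        with opener.open(test_url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError, HTTPError and socket timeouts are all OSError subclasses.
        return False


# Placeholder address from a reserved documentation range
opener = build_proxy_opener(proxy_url("203.0.113.10", 8080))
```

Calling `check_proxy(opener, "https://www.linkedin.com/")` then performs the connection test. A `False` result only says the request did not succeed; it does not distinguish a dead proxy from a refusal by the site.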

Proxy IP rotation and management

To further improve scraping efficiency, use proxy IP rotation. Rotating through different proxy IPs spreads requests across addresses and reduces the risk of being blocked.

Timed IP rotation: Set an IP rotation frequency so that no proxy IP stays in use long enough to reach LinkedIn's rate-limit thresholds.

Spread out the request volume: Avoid sending too many requests from a single IP, and keep the request pattern close to the browsing habits of real users.

Automated proxy management: Proxy management services (such as Luminati, now Bright Data, or Lunaproxy) can handle the allocation and rotation of IPs automatically, which reduces operational overhead.
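A minimal sketch of timed, budgeted rotation is shown below. The `ProxyRotator` class is a hypothetical helper, and the default limits (20 requests or 60 seconds per proxy) are arbitrary assumptions: LinkedIn does not publish its thresholds, so these values would need tuning in practice.

```python
import itertools
import time


class ProxyRotator:
    """Round-robin over proxies, switching when the current proxy has used
    up its request budget or has been in use for too long."""

    def __init__(self, proxies, max_requests=20, max_seconds=60.0,
                 clock=time.monotonic):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(proxies)
        self._max_requests = max_requests
        self._max_seconds = max_seconds
        self._clock = clock  # injectable so rotation can be tested without waiting
        self._advance()

    def _advance(self):
        """Move to the next proxy and reset its usage counters."""
        self._current = next(self._cycle)
        self._used = 0
        self._since = self._clock()

    def next_proxy(self):
        """Return the proxy to use for the next request."""
        expired = self._clock() - self._since >= self._max_seconds
        if self._used >= self._max_requests or expired:
            self._advance()
        self._used += 1
        return self._current


# Placeholder addresses; each call hands out the proxy for one request
rotator = ProxyRotator(["203.0.113.10:8000", "203.0.113.11:8000"], max_requests=2)
sequence = [rotator.next_proxy() for _ in range(5)]
```

With a budget of two requests per proxy, `sequence` uses the first proxy twice, the second proxy twice, and then returns to the first.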

4. Solve common problems in LinkedIn data scraping

Even with a proxy tool configured, you may still run into some common problems when scraping LinkedIn data. Here are some suggestions for solving them:

Proxy IP is blocked

A proxy IP that is used to scrape a large amount of data may itself be blocked. To reduce this risk, you can take the following measures:

Reduce the request frequency: Slow down the scraping speed so that it resembles the browsing behavior of real users.

Increase the IP rotation frequency: Limit both the usage time and the request volume of each proxy IP.

Use residential proxies: Although residential proxies are more expensive, they have a lower risk of being banned.
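The first two measures can be sketched as a randomized delay between requests plus a cool-down for proxies that appear to be blocked. Everything here is illustrative: `human_delay`, `ProxyPool` and `BLOCK_STATUSES` are hypothetical names, the timing values are guesses, and treating HTTP 403 and 429 as block signals is an assumption rather than documented LinkedIn behavior.

```python
import random
import time

# Assumption: these status codes are treated as "this proxy is being refused".
BLOCK_STATUSES = {403, 429}


def human_delay(base=3.0, jitter=2.0, rng=random):
    """Seconds to wait before the next request: a base pause plus random jitter."""
    return base + rng.uniform(0.0, jitter)


class ProxyPool:
    """Keeps blocked proxies out of use until a cool-down period has passed."""

    def __init__(self, proxies, cooldown=600.0, clock=time.monotonic):
        self._cooldown = cooldown
        self._clock = clock
        # Each proxy maps to the time at which it may be used again.
        self._blocked_until = {p: float("-inf") for p in proxies}

    def available(self):
        """Return the proxies that are not currently cooling down."""
        now = self._clock()
        return [p for p, until in self._blocked_until.items() if until <= now]

    def report(self, proxy, status):
        """Record a response status; start a cool-down if it looks like a block."""
        if status in BLOCK_STATUSES:
            self._blocked_until[proxy] = self._clock() + self._cooldown


pool = ProxyPool(["203.0.113.10:8000", "203.0.113.11:8000"])
pool.report("203.0.113.10:8000", 429)  # first proxy now rests for 10 minutes
wait = human_delay()                   # pass this to time.sleep() between requests
```

Randomizing the pause avoids the perfectly regular request rhythm that is an easy signal for automated traffic.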

Frequent CAPTCHA verification

LinkedIn uses CAPTCHA challenges to stop large volumes of automated requests. If you encounter this problem frequently, you can:

Use more advanced proxies: For example, combine residential proxies with automated CAPTCHA solving tools.

Simulate user behavior: Add random clicks, scrolling and other actions during the scraping process to reduce the chance of being identified as a bot.

Conclusion

Scraping proxy tools are central to improving the efficiency of LinkedIn data collection. By configuring proxy IPs correctly, combining and rotating different types of proxies, and managing request rates sensibly, you can reduce the impact of LinkedIn's anti-crawling mechanism and obtain the data you need safely and efficiently. Whether the task is cross-regional collection or large-scale scraping, proxy tools provide strong support for your data collection process.