How to Use the curl Command with a Dynamic Proxy to Collect Data Efficiently

In large-scale data collection, requests sent from a single fixed IP often run into anti-crawler measures such as IP blocking and rate limiting. Routing curl requests through a dynamic proxy helps avoid these restrictions and can improve both the success rate and the efficiency of data collection. This article explains step by step how to configure a dynamic proxy for the curl command and introduces some practical techniques.

I. Understand the basic concepts of dynamic proxies and the curl command

Before configuring anything, it helps to understand what a dynamic proxy is and what the curl command does.

What is a dynamic proxy?

A dynamic proxy accesses the network through IP addresses that change frequently. Unlike a static proxy, which keeps the same IP, a dynamic proxy switches to a new IP, usually automatically after a set period of time. Because the source IP keeps changing, it is harder for the target website's anti-crawler mechanism to block the traffic by IP, which makes the collection process smoother.

The role of the curl command

curl is a command-line tool widely used for sending requests and transferring files. It can send an HTTP request to a specified URL and retrieve the response from the target address. In network data collection, curl can be combined with a dynamic proxy to fetch data efficiently.

II. Combine a dynamic proxy with the curl command

To use a dynamic proxy with curl, you first need access to proxy servers. The specific steps are as follows.

1. Select a dynamic proxy service

Many proxy providers offer dynamic proxy services, and choosing a suitable one is key to the quality of data collection. When purchasing a dynamic proxy service, consider the following factors first:

Stability: Whether the proxy connection stays up without frequent disconnections.

IP switching frequency: Providers support different IP rotation intervals, so choose one that matches your needs.

Geographic location: If the data you collect varies by region, choose a proxy service with IPs in the regions you need.

2. Get the IP and port of the proxy server

After selecting a proxy service provider and completing registration, you usually receive a proxy IP pool containing a large number of IP addresses and their port numbers. Record this information; it is needed later to configure the proxy settings of the curl command.

3. Configure the curl command to use a dynamic proxy

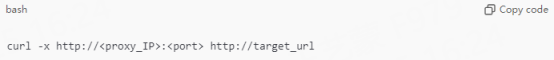

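When using the curl command to request data, you can set the proxy through the -x option (long form --proxy). The option takes the proxy address in the form [protocol://]host[:port] and is followed by the target URL. If the protocol is omitted, curl treats the proxy as an HTTP proxy.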

For example, assuming the proxy IP is 192.168.1.100, the port is 8080, and the request URL is http://example.com, the command is as follows:
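A minimal sketch of that command, using the example values from the text. The wrapper function name `fetch_via_proxy` is an illustrative assumption, not part of curl.

```shell
# One-off form of the command, with the example proxy and URL:
#   curl -x 192.168.1.100:8080 http://example.com
#
# The same call wrapped in a reusable function (hypothetical name):
fetch_via_proxy() {
    local proxy="$1"   # proxy address as host:port
    local url="$2"     # target URL
    # -x routes the request through the given proxy.
    curl -x "$proxy" "$url"
}
```

With this definition, `fetch_via_proxy 192.168.1.100:8080 http://example.com` sends the same request as the one-off command.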

To use several proxies from a dynamic proxy pool, you can list multiple proxy addresses in a script and rotate through them.

III. Create a dynamic proxy automatic switching script

In large-scale data collection, switching proxy IPs by hand is cumbersome and inefficient. A shell script can switch between the proxy IPs in the pool automatically and improve collection efficiency.

1. Create a proxy IP list file

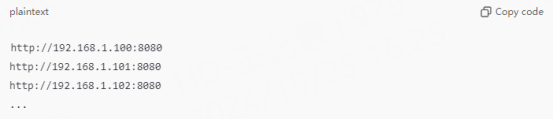

Save the proxy IPs and ports to a file such as proxies.txt, one IP:port pair per line:
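One way to create such a file is a here-document. The three addresses below are placeholders, not real proxies; replace them with the entries supplied by your provider.

```shell
# Write one proxy per line, in IP:port form, to proxies.txt.
# The addresses are placeholders for illustration only.
cat > proxies.txt <<'EOF'
192.168.1.100:8080
192.168.1.101:8080
192.168.1.102:8080
EOF
```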

2. Write an automatic switching script

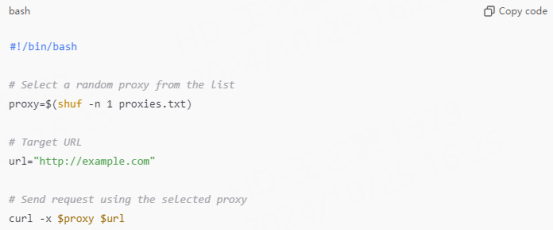

The following is a simple Shell script example for randomly selecting a proxy from proxies.txt and sending a request through curl:
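A minimal sketch of such a script, assuming GNU `shuf` is available and that proxies.txt holds one IP:port entry per line. The function name and the `PROXY_FILE` and `TARGET_URL` variables are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Pick one random proxy from a list file and send a request through it.
# PROXY_FILE and TARGET_URL are illustrative defaults; adjust as needed.
PROXY_FILE="${PROXY_FILE:-proxies.txt}"
TARGET_URL="${TARGET_URL:-http://example.com}"

fetch_with_random_proxy() {
    local proxy
    # shuf -n 1 prints one randomly chosen line of the file.
    proxy=$(shuf -n 1 "$PROXY_FILE") || return 1
    if [ -z "$proxy" ]; then
        echo "No proxy found in $PROXY_FILE" >&2
        return 1
    fi
    echo "Using proxy: $proxy" >&2
    curl -x "$proxy" "$TARGET_URL"
}
```

Adding a final line that calls `fetch_with_random_proxy` and saving the file as an executable script gives a script that draws a proxy at random on every run.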

In this script, shuf -n 1 proxies.txt randomly selects one proxy address from proxies.txt, and the script passes that address to curl. A proxy is drawn at random each time the script runs, so successive runs generally use different proxies, which produces the dynamic proxy effect.

3. Run the script on a schedule

You can use Linux's cron to run the script automatically at a fixed interval. Open the current user's cron table for editing with the crontab -e command.

Add the following line to run it every 10 minutes:
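A sketch of such a crontab entry. The script path `/path/to/proxy_fetch.sh` is a placeholder; substitute the location where you saved the script.

```text
# minute  hour  day-of-month  month  day-of-week  command
*/10 * * * * /path/to/proxy_fetch.sh
```

The `*/10` in the minute field means every tenth minute, and the remaining four `*` fields match every hour, day, month, and weekday.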

IV. Tips for improving data collection efficiency

Combining a dynamic proxy with the curl command can improve collection efficiency, but for the best results pay attention to the following tips.

1. Control request frequency

Even with a dynamic proxy, an excessive request frequency can still alert the target website and cause collection to fail. Control the request frequency according to the website's limits and the proxy's performance, and add appropriate delays between requests.
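A minimal sketch of adding delays, assuming GNU `shuf` is available. The function name and the 1 to 3 second range are illustrative assumptions; tune the range to the target site.

```shell
# Fetch each URL in turn through one proxy, pausing a random number of
# seconds after every request. The delay range is an assumed default.
MIN_DELAY="${MIN_DELAY:-1}"
MAX_DELAY="${MAX_DELAY:-3}"

fetch_politely() {
    local proxy="$1"
    shift
    local url delay
    for url in "$@"; do
        # -s hides the progress meter so only the response is printed.
        curl -s -x "$proxy" "$url"
        # shuf -i LO-HI -n 1 prints one random integer in the range.
        delay=$(shuf -i "${MIN_DELAY}-${MAX_DELAY}" -n 1)
        sleep "$delay"
    done
}
```

For example, `fetch_politely 192.168.1.100:8080 http://example.com/a http://example.com/b` requests both pages with a pause after each one.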

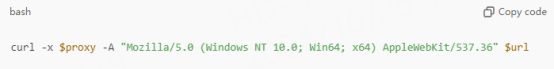

2. Randomize request header information

Many anti-crawler mechanisms identify crawler traffic from request header information. Varying the headers from request to request makes the traffic look less uniform and harder to fingerprint. For example:
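A minimal sketch that picks a random User-Agent for each request, assuming GNU `shuf` is available. The function names and the User-Agent strings are illustrative; in practice, use values that match current browsers.

```shell
# Print one User-Agent string chosen at random from a small list.
# The strings are examples only and may be out of date.
random_user_agent() {
    printf '%s\n' \
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36" \
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.0 Safari/605.1.15" \
        "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0" |
        shuf -n 1
}

fetch_with_random_headers() {
    local proxy="$1" url="$2"
    # -A sets the User-Agent header; -H adds an arbitrary header.
    curl -x "$proxy" \
         -A "$(random_user_agent)" \
         -H "Accept-Language: en-US,en;q=0.9" \
         "$url"
}
```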

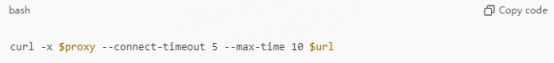

3. Set timeout parameters

To avoid stalling on a slow proxy server, set timeouts with the --connect-timeout and --max-time options of the curl command. For example:
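A minimal sketch with a 5-second connection timeout and a 10-second overall limit. The function name `fetch_with_timeouts` is an illustrative assumption.

```shell
# Send a proxied request that gives up on slow connections.
fetch_with_timeouts() {
    local proxy="$1" url="$2"
    # --connect-timeout: maximum seconds allowed for establishing the connection.
    # --max-time: maximum seconds allowed for the whole transfer.
    curl -x "$proxy" --connect-timeout 5 --max-time 10 "$url"
}
```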

Setting --connect-timeout 5 and --max-time 10 limits the connection phase to 5 seconds and the whole request to 10 seconds, so a slow proxy cannot hold up collection.

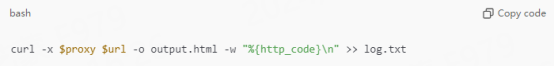

4. Use logs to record collection status

During data collection, it is important to record the status of each request so that problems can be discovered promptly. Information such as the request status and response code can be written to a log file:
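A minimal sketch of such logging. The function name, the `LOG_FILE` variable, and the log line layout are illustrative assumptions.

```shell
# Append one line per request to a log file: timestamp, proxy, URL, status.
LOG_FILE="${LOG_FILE:-collect.log}"

fetch_and_log() {
    local proxy="$1" url="$2" code
    # -s hides the progress meter, -o /dev/null discards the body,
    # -w '%{http_code}' prints only the HTTP status code.
    # curl prints 000 when no HTTP response was received.
    code=$(curl -s -o /dev/null -w '%{http_code}' -x "$proxy" "$url")
    printf '%s proxy=%s url=%s status=%s\n' \
        "$(date '+%Y-%m-%d %H:%M:%S')" "$proxy" "$url" "$code" >> "$LOG_FILE"
}
```

This variant discards the response body to keep the example short; to keep the data, replace `/dev/null` with an output file path.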

Summary

Combining the curl command with a dynamic proxy can improve the success rate of data collection and reduce the risk of being banned. The method suits scenarios that require high-frequency or cross-regional collection. Automatic proxy switching through scripts, controlled request frequency, randomized request headers, timeouts, and logging further improve collection efficiency.