Node.js and Proxy IP: Best Practices for Building Efficient Crawlers

Web crawlers are widely used in data-driven decision-making and market analysis. However, websites usually take measures to limit crawler access, such as IP-based rate limits and bans on specific IPs. Proxy IPs are a key tool for working around these restrictions. Combining the asynchronous capabilities of Node.js with the anonymity of proxy IPs makes it possible to build an efficient web crawler with a higher data-collection success rate.

1. What is a proxy IP?

A proxy IP is the address of a proxy server that makes network requests on your behalf, in place of your real IP address. Simply put, when you access the network through a proxy IP, the target server sees only the proxy's address, not your real IP. A proxy IP can help you bypass various network restrictions, hide your identity, and even access geographically restricted content.

The main advantages of proxy IP:

Improve privacy: Hide the real IP address to avoid being tracked or blocked by the website.

Bypass IP blocking: When the target website blocks a specific IP, the proxy IP can enable the crawler to bypass these blocks.

Disperse request traffic: Rotating across multiple proxy IPs avoids sending so many requests from a single IP that it gets blocked.

Access geographically restricted content: Proxy IP can help crawlers obtain cross-regional data, which is particularly suitable for market analysis and competitive intelligence collection.

2. Introduction to Web Crawlers in Node.js

Node.js is well suited to developing web crawlers thanks to its efficient asynchronous processing and rich library support. Unlike runtimes built around blocking I/O, Node.js can keep a large number of HTTP requests in flight without blocking the main thread, which improves crawler throughput.
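To illustrate the non-blocking model, the following sketch keeps several requests in flight at once while capping how many run concurrently. The helper name `mapWithConcurrency` and the limit of 5 are illustrative assumptions, not part of any library. The commented usage assumes Node.js 18 or later, where `fetch` is built in.

```javascript
// Hypothetical helper: run `worker` over `items` with at most `limit`
// calls in flight at the same time. Results keep the input order.
async function mapWithConcurrency(items, limit, worker) {
  const results = new Array(items.length);
  let next = 0; // index of the next item that has not been claimed yet

  // Each runner repeatedly claims the next unprocessed item until none remain.
  async function runner() {
    while (next < items.length) {
      const index = next++;
      results[index] = await worker(items[index], index);
    }
  }

  const runnerCount = Math.max(1, Math.min(limit, items.length));
  await Promise.all(Array.from({ length: runnerCount }, () => runner()));
  return results;
}

// Usage (assumes Node.js 18+ for the global fetch; URLs are placeholders):
// const urls = ['https://example.com/a', 'https://example.com/b'];
// mapWithConcurrency(urls, 5, async (url) => (await fetch(url)).status)
//   .then((statuses) => console.log(statuses));
```

Capping concurrency is a design choice rather than a requirement: an uncapped `Promise.all` over every URL would also work, but it tends to trigger rate limits much sooner. If any worker throws, the returned promise rejects, so callers may want to catch errors inside the worker.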

Commonly used web crawler libraries in Node.js are:

axios: A Promise-based HTTP client that supports simple GET and POST requests.

request-promise: A Promise-based wrapper around the request library. It is deprecated and no longer maintained, but it still appears in many existing crawler projects.

puppeteer: A library for controlling Chrome or Chromium browsers, suitable for crawling dynamically rendered websites.

cheerio: A lightweight library, similar to jQuery, that can quickly parse and process HTML documents.

3. How to use proxy IP in Node.js

When building an efficient crawler, proxy IPs are an effective way to work around a website's access restrictions. Next, we will show how to use proxy IPs in Node.js to improve the efficiency of the crawler.

Step 1: Install required dependencies

First, you need to install several necessary libraries in the Node.js project:

axios: used to send HTTP requests.

tunnel: supports sending requests through a proxy server.

cheerio: parses and processes HTML responses.

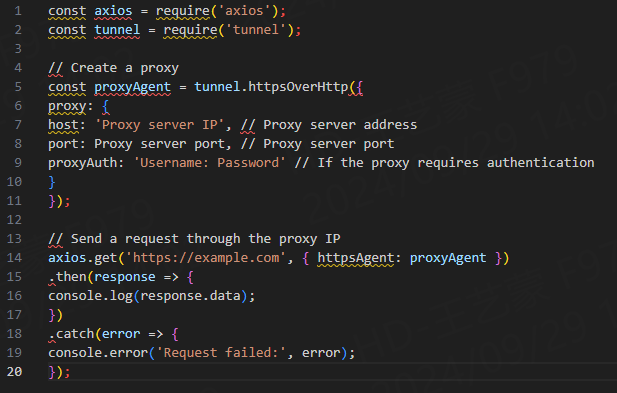

Step 2: Configure proxy IP

To use a proxy IP, the HTTP client must be configured to route its requests through the proxy server. Here is a simple example of using axios with a proxy IP:

In this example, the tunnel library is used to create a proxy channel, and network requests are made through the proxy IP. You can try different proxy IPs to see which ones give the crawler the best success rate.

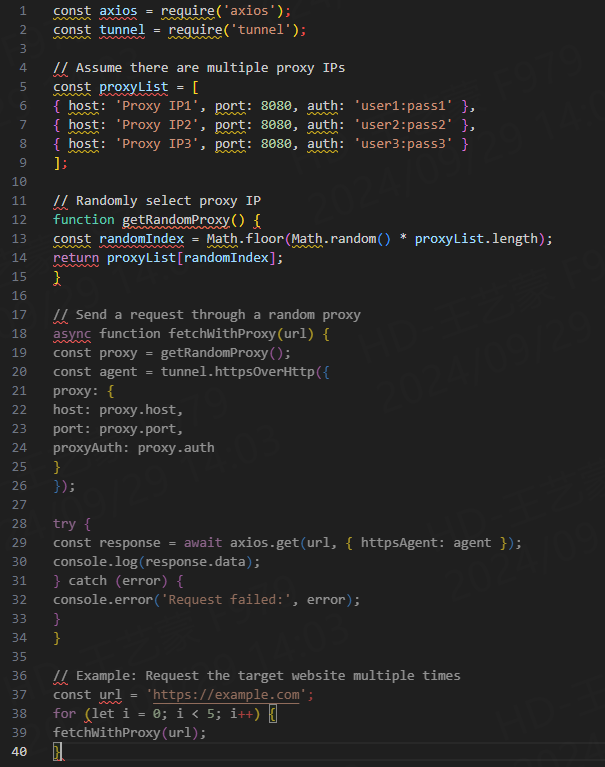
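A minimal sketch of the setup described above, assuming axios and tunnel have been installed with `npm install axios tunnel`. The helper names `toTunnelOptions` and `fetchViaProxy` are hypothetical, and the proxy address is a placeholder from the documentation-only IP range. It is one way to wire the two libraries together, not necessarily the exact listing the article had in mind.

```javascript
// Convert a proxy URL such as "http://user:pass@203.0.113.10:8080"
// into the options object that the tunnel package expects.
// The port defaults to 80 when the URL omits it.
function toTunnelOptions(proxyUrl) {
  const { hostname, port, username, password } = new URL(proxyUrl);
  const proxy = { host: hostname, port: Number(port) || 80 };
  if (username) {
    proxy.proxyAuth = `${decodeURIComponent(username)}:${decodeURIComponent(password)}`;
  }
  return { proxy };
}

// Fetch an HTTPS page through an HTTP proxy.
async function fetchViaProxy(targetUrl, proxyUrl) {
  // Required inside the function so the helper above stays usable on its own.
  const axios = require('axios');
  const tunnel = require('tunnel');

  const httpsAgent = tunnel.httpsOverHttp(toTunnelOptions(proxyUrl));
  const response = await axios.get(targetUrl, {
    httpsAgent,     // tunnels the HTTPS request through the proxy
    proxy: false,   // turn off axios's own proxy handling so it does not interfere
    timeout: 10000, // give up on slow proxies after 10 seconds
  });
  return response.data;
}

// Usage (placeholder proxy address):
// fetchViaProxy('https://example.com', 'http://203.0.113.10:8080')
//   .then((html) => console.log(html.length))
//   .catch((err) => console.error('Request failed:', err.message));
```

`tunnel.httpsOverHttp` fits the common case of an HTTPS target reached through a plain HTTP proxy. For a plain HTTP target, `tunnel.httpOverHttp` with the `httpAgent` option would be the analogous choice.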

4. How to implement IP rotation

In actual crawler scenarios, a single proxy IP is easily blocked. Therefore, rotating proxy IPs is an effective way to improve the stability of the crawler. By using different proxy IPs for each request, the probability of being blocked by the target website can be greatly reduced.

Below we show how to implement IP rotation in Node.js:

This example shows how to randomly select a proxy from a list of proxy IPs and send a request through it. In this way, the crawler can keep working for longer with a lower risk of being blocked.
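The random selection described above can be sketched as follows. The names `pickRandomProxy` and `fetchWithRotation`, the retry count of 3, and the `fetchFn(url, proxy)` callback are assumptions made for illustration. `fetchFn` stands for whatever function actually performs the proxied request, for example one built on axios and tunnel.

```javascript
// Pick one proxy at random. `random` is injectable so the choice can be tested.
function pickRandomProxy(proxies, random = Math.random) {
  if (proxies.length === 0) {
    throw new Error('Proxy list is empty');
  }
  return proxies[Math.floor(random() * proxies.length)];
}

// Try the request through randomly chosen proxies until one succeeds
// or `maxAttempts` is reached, then rethrow the last error.
async function fetchWithRotation(url, proxies, fetchFn, maxAttempts = 3) {
  let lastError;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const proxy = pickRandomProxy(proxies);
    try {
      return await fetchFn(url, proxy);
    } catch (err) {
      lastError = err; // remember the failure and try another proxy
      console.warn(`Attempt ${attempt} via ${proxy} failed: ${err.message}`);
    }
  }
  throw lastError;
}

// Usage (placeholder proxy addresses; myProxiedFetch is a hypothetical function):
// const proxies = ['http://203.0.113.10:8080', 'http://203.0.113.11:8080'];
// fetchWithRotation('https://example.com', proxies, myProxiedFetch)
//   .then((html) => console.log(html.length));
```

Because the pick is random, a retry may land on the same proxy again. A round-robin index or a list that drops failed proxies would avoid that, at the cost of a little extra state.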

5. Precautions when using proxy IPs

Although proxy IPs can significantly improve the efficiency of crawlers, in actual applications, the following points should still be noted:

The quality of proxy IPs: High-quality proxy IPs provide more stable connection speeds and higher anonymity. Poor-quality proxy IPs may cause frequent disconnection or be identified by websites.

Use a proxy pool: Relying on a single or a small number of proxy IPs cannot effectively prevent blocking. It is best to use a professional proxy pool service and rotate IPs regularly.

Avoid overly frequent requests: Even with a proxy IP, requests that arrive too often may cause the target website to take stronger protective measures. Setting a reasonable request interval (such as sleeping for a few seconds between requests) can reduce the risk of being blocked.

Comply with the website's robots.txt: Act within the ethical and legal boundaries of web crawling and respect the crawling rules of the target website.
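The request-interval advice above can be sketched like this. The function names and the default window of 2 to 5 seconds are illustrative assumptions, and `fetchFn(url)` again stands for whatever function performs the actual request. A randomized pause is used here so that requests are not perfectly periodic; a fixed interval would also satisfy the advice.

```javascript
// Resolve after `ms` milliseconds.
function sleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Pick a delay in [minMs, maxMs). `random` is injectable so it can be tested.
function randomDelay(minMs, maxMs, random = Math.random) {
  return Math.floor(minMs + random() * (maxMs - minMs));
}

// Fetch URLs one at a time, pausing between consecutive requests.
async function crawlPolitely(urls, fetchFn, { minMs = 2000, maxMs = 5000 } = {}) {
  const results = [];
  for (let i = 0; i < urls.length; i++) {
    if (i > 0) {
      await sleep(randomDelay(minMs, maxMs)); // no pause before the first request
    }
    results.push(await fetchFn(urls[i]));
  }
  return results;
}

// Usage (placeholder URLs; myFetch is a hypothetical request function):
// crawlPolitely(['https://example.com/1', 'https://example.com/2'], myFetch)
//   .then((pages) => console.log(pages.length));
```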

6. Conclusion

Combining Node.js with proxy IPs is an effective way to build web crawlers that cope with website restrictions and collect data at scale. With proxy IPs, a crawler can rotate addresses, reduce the risk of being blocked, and raise its data-collection success rate. The asynchronous processing capabilities of Node.js, together with flexible use of proxy IPs, let developers build a crawler system that is both efficient and highly anonymous.

In practical applications, in addition to mastering the technology, it is also necessary to pay attention to complying with the ethical standards of web crawlers to ensure that data is obtained within the legal scope.