How to make Selenium run more covertly: practical methods to prevent detection by websites

Selenium is a powerful automated testing tool. Because it can handle JavaScript rendering, Ajax requests, and complex user interactions, it is also widely used for web crawling and data scraping. However, when Selenium drives automated operations or crawlers, the target website sometimes detects and blocks it. This article explains how websites detect Selenium and presents several common solutions.

How websites detect Selenium

Before looking at solutions, let's review the most common detection methods and how they work.

1. window.navigator.webdriver property detection

The window.navigator.webdriver property, defined in the W3C WebDriver specification, sits on the navigator object of the browser's global window object and indicates whether the browser is being controlled by automation. You may encounter three values:

true means "the current browser is being controlled by an automated tool"

false means "the current browser is not being controlled by an automated tool"

undefined means "no information is available to indicate whether the browser is being controlled by an automated tool"

When Selenium WebDriver starts the browser, the browser sets this property to true by default, precisely so that websites can detect the automated session through JavaScript.

For a normal user, the value is false (or undefined in browsers that do not implement the property). If you use Selenium without modifying window.navigator.webdriver, however, any script on the page reads it as true.

Once the website determines that a visit comes from an automated tool, it may respond with countermeasures such as displaying a CAPTCHA, restricting page access, logging the visit, or issuing warnings.
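A minimal sketch of how the flag can be read and masked, assuming Selenium 4 for Python with a local Chrome and chromedriver. The --disable-blink-features=AutomationControlled switch, the excludeSwitches option, and the injected getter are widely shared community workarounds rather than documented anti-detection features, and a careful site can still notice that navigator.webdriver has been redefined. The helper names (chrome_stealth_arguments, launch_masked_chrome) are hypothetical.

```python
# Sketch, assuming Selenium 4 (Python) and a local Chrome + chromedriver.

# JavaScript a site might run to check the flag; we can run it ourselves too.
WEBDRIVER_CHECK_JS = "return navigator.webdriver;"

# Injected before any page script runs: defines a getter on the navigator
# object so navigator.webdriver reads as undefined. A heuristic, not a guarantee.
HIDE_WEBDRIVER_JS = (
    "Object.defineProperty(navigator, 'webdriver', {get: () => undefined});"
)


def chrome_stealth_arguments():
    """Command-line switches that stop Chrome from advertising automation."""
    return [
        # Turns off the Blink feature that sets navigator.webdriver to true.
        "--disable-blink-features=AutomationControlled",
    ]


def launch_masked_chrome():
    # Imported lazily so the helpers above work without Selenium installed.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    for arg in chrome_stealth_arguments():
        options.add_argument(arg)
    # Drops the --enable-automation switch that chromedriver adds by default.
    options.add_experimental_option("excludeSwitches", ["enable-automation"])

    driver = webdriver.Chrome(options=options)
    # Chromium-only: run HIDE_WEBDRIVER_JS in every new document via DevTools.
    driver.execute_cdp_cmd(
        "Page.addScriptToEvaluateOnNewDocument", {"source": HIDE_WEBDRIVER_JS}
    )
    return driver
```

After launch_masked_chrome() opens a page, driver.execute_script(WEBDRIVER_CHECK_JS) should return None or False rather than True if the masking took effect.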

2. User-Agent string detection

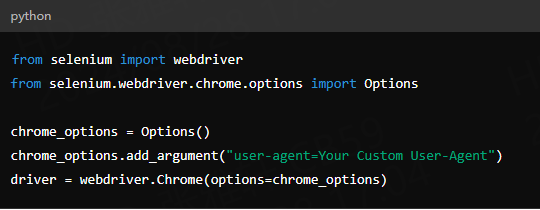

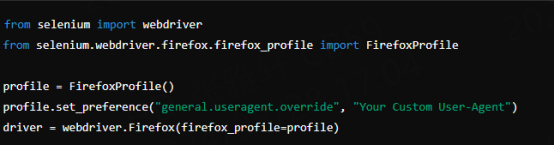

Changing the User-Agent is an effective way to avoid one form of Selenium detection. By default, the browser Selenium launches may send a User-Agent string that identifies it as automated; for example, headless Chrome typically reports "HeadlessChrome" instead of "Chrome". You can change the User-Agent to mimic a real user's browser as follows:

Chrome: Use ChromeOptions to pass a --user-agent argument.

Firefox: Use FirefoxProfile (or the equivalent preference on FirefoxOptions) to set the general.useragent.override preference.
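The two steps above might look like this in Python, assuming Selenium 4. EXAMPLE_UA is an illustrative string, not a recommendation: a User-Agent that claims a different browser version or operating system from the one actually running can itself be flagged by fingerprint checks. Current Selenium versions let you set the Firefox preference directly on FirefoxOptions, which this sketch does instead of building a FirefoxProfile. The helper names are hypothetical.

```python
# Sketch: override the User-Agent in Chrome and Firefox (Selenium 4, Python).
# Illustrative Chrome-on-Windows UA; match it to the browser you really run.
EXAMPLE_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36"
)


def chrome_user_agent_argument(user_agent):
    """Chrome accepts the UA as a command-line switch."""
    return f"--user-agent={user_agent}"


def firefox_user_agent_prefs(user_agent):
    """Firefox reads the override from an about:config preference."""
    return {"general.useragent.override": user_agent}


def make_chrome(user_agent=EXAMPLE_UA):
    from selenium import webdriver  # lazy import: only needed to launch

    options = webdriver.ChromeOptions()
    options.add_argument(chrome_user_agent_argument(user_agent))
    return webdriver.Chrome(options=options)


def make_firefox(user_agent):
    # No default: pass a Firefox-style UA so it agrees with the real browser.
    from selenium import webdriver

    options = webdriver.FirefoxOptions()
    for name, value in firefox_user_agent_prefs(user_agent).items():
        options.set_preference(name, value)
    return webdriver.Firefox(options=options)
```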

3. User behavior analysis

Automated scripts usually interact with web pages differently from humans. A major way websites detect scripts and crawlers is to analyze these behaviors and judge whether they come from a human, for example:

Page scrolling and dwell time: Scripts usually spend minimal time on a page and scroll at a fixed, uniform speed, which looks mechanical and rigid. Human users linger on different parts of a page for varying lengths of time depending on their interests, and they do not page through content in a fixed pattern.

Mouse clicks: Many automated click programs fire at consistent intervals and hit exactly the same screen position, unlike the more random click behavior of humans.

Time of visit: Bots may run during off-peak hours, such as late at night or early in the morning; some scripts even run around the clock because of poor scheduling.

Request interval: Unlike the sporadic pattern of human activity, automated scripts may send requests at regular, predictable intervals. When they also send high-frequency requests with the same User-Agent string, they are not only easy for the website to detect but may also trigger its DDoS (Distributed Denial of Service) protection, because they consume too many server resources.
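To counter the scrolling and timing signals listed above, a script can randomize step sizes and pauses. The sketch below is one assumed approach, not a proven evasion method: scroll_plan, jittered_delay, and human_like_scroll are hypothetical helpers, and the timing constants are arbitrary. Only human_like_scroll needs a live Selenium driver.

```python
import random
import time


def jittered_delay(base, spread, rng=random):
    """Return a pause of roughly `base` seconds (+/- `spread`), never below zero."""
    return max(0.0, rng.uniform(base - spread, base + spread))


def scroll_plan(page_height, viewport, rng=random):
    """Split a page into uneven scroll steps, each followed by a varied dwell time.

    Returns a list of (pixels_to_scroll, seconds_to_pause) tuples whose pixel
    values add up to the distance needed to reach the bottom of the page.
    """
    remaining = max(0, page_height - viewport)
    low = max(1, viewport // 4)   # guard against a zero-sized step
    high = max(low, viewport)
    plan = []
    while remaining > 0:
        # Uneven step sizes instead of a fixed scroll distance.
        step = min(remaining, rng.randint(low, high))
        # Varied dwell time, with an occasional longer stop as if reading closely.
        pause = jittered_delay(1.5, 1.0, rng)
        if rng.random() < 0.2:
            pause += rng.uniform(2.0, 6.0)
        plan.append((step, pause))
        remaining -= step
    return plan


def human_like_scroll(driver, rng=random):
    """Apply a scroll plan to a live Selenium driver."""
    height = driver.execute_script("return document.body.scrollHeight;")
    viewport = driver.execute_script("return window.innerHeight;")
    for step, pause in scroll_plan(height, viewport, rng):
        driver.execute_script("window.scrollBy(0, arguments[0]);", step)
        time.sleep(pause)
```

Passing a seeded random.Random as rng makes a plan reproducible for debugging; leaving the default gives a different pattern on every run, which is the point.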

4. Browser fingerprint detection

A browser fingerprint is a profile that a website builds from detailed information sent by the user's browser, including the browser version, screen resolution, installed fonts, and installed plug-ins. Because the combination is often unique, it acts as the user's online fingerprint, hence the name. Automated scripts usually lack a personalized browser configuration, so their fingerprints tend to match or resemble the known patterns of automation tools and crawlers, which makes them easy to identify.
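To make the idea concrete, the sketch below collects a handful of the attributes mentioned above through Selenium's execute_script and hashes them into an identifier. Real fingerprinting scripts combine many more signals (canvas and WebGL rendering, fonts, audio), so this is only an illustration; FINGERPRINT_JS, fingerprint_id, and collect_fingerprint are hypothetical names.

```python
import hashlib
import json

# JavaScript that gathers a few fingerprint attributes from the page context.
FINGERPRINT_JS = """
return {
  userAgent: navigator.userAgent,
  language: navigator.language,
  platform: navigator.platform,
  screen: [screen.width, screen.height, screen.colorDepth],
  timezone: Intl.DateTimeFormat().resolvedOptions().timeZone,
  plugins: Array.from(navigator.plugins).map(p => p.name),
  hardwareConcurrency: navigator.hardwareConcurrency
};
"""


def fingerprint_id(attributes):
    """Hash the collected attributes into a short, stable identifier.

    Keys are sorted so the same configuration always yields the same ID,
    which is what lets a site recognise a browser across visits.
    """
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


def collect_fingerprint(driver):
    """Run FINGERPRINT_JS in a live Selenium session; return (attributes, id)."""
    attributes = driver.execute_script(FINGERPRINT_JS)
    return attributes, fingerprint_id(attributes)
```

Two sessions launched with identical settings produce the same ID, which is why reusing one configuration for every task is easy to spot.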

How to solve Selenium detection

1. Use BrowserScan's bot detection feature

Before running a script or after updating it, it is worth using a dedicated tool to pinpoint the specific problem. BrowserScan is a comprehensive tool with professional bot detection features. It focuses on the following four detection areas, which cover most of the parameters or weaknesses a website can detect. Beyond the common weaknesses described in this article, it checks dozens of other related parameters, helping you quickly troubleshoot problems and make timely adjustments:

WebDriver: Checks whether your browser can be identified as controlled by WebDriver.

User-Agent: Checks whether your User-Agent string looks like a real user's or reveals that you are using a script.

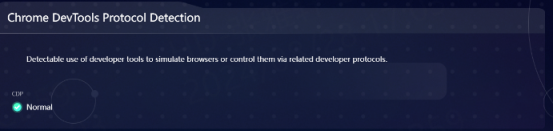

CDP (Chrome DevTools Protocol): BrowserScan can detect whether the browser is being emulated or controlled through the DevTools Protocol.

Navigator object: Checks the navigator object for spoofing, that is, anomalies that do not match typical user data.
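As a rough local complement to a tool like BrowserScan, a script can read a few of the same kinds of signals before visiting the target site. The check below is a hypothetical sketch covering only four common giveaways, not BrowserScan's method or its full parameter set, and an empty plugin list is a heuristic rather than proof of automation.

```python
# JavaScript that gathers the signals checked below from the current page.
AUDIT_JS = """
return {
  webdriver: navigator.webdriver,
  userAgent: navigator.userAgent,
  pluginCount: navigator.plugins.length,
  languages: navigator.languages
};
"""


def audit_signals(signals):
    """Return readable warnings for values that commonly give automation away."""
    warnings = []
    if signals.get("webdriver"):
        warnings.append("navigator.webdriver is true")
    if "HeadlessChrome" in (signals.get("userAgent") or ""):
        warnings.append("User-Agent contains 'HeadlessChrome'")
    if signals.get("pluginCount", 0) == 0:
        warnings.append("navigator.plugins is empty")
    if not signals.get("languages"):
        warnings.append("navigator.languages is empty")
    return warnings


def audit_driver(driver):
    """Run the checks inside a live Selenium session (e.g. on a blank page)."""
    return audit_signals(driver.execute_script(AUDIT_JS))
```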

2. Use a proxy IP

Using a proxy hides your real IP address and makes your automation script harder to trace. If the target website has blocked your current IP address, switching to a different IP address may also resolve the problem. In Chrome, a proxy is usually set with the --proxy-server command-line switch when the browser is launched, which routes all HTTP and HTTPS requests through the specified proxy server.
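A sketch of that Chrome setup, assuming Selenium 4 for Python and a proxy that needs no username or password (Chrome's --proxy-server switch does not take credentials directly). The function names are hypothetical.

```python
def proxy_server_argument(host, port, scheme="http"):
    """Build Chrome's --proxy-server switch; HTTP and HTTPS traffic uses this proxy."""
    if scheme not in ("http", "https", "socks4", "socks5"):
        raise ValueError(f"unsupported proxy scheme: {scheme}")
    return f"--proxy-server={scheme}://{host}:{port}"


def chrome_with_proxy(host, port, scheme="http"):
    from selenium import webdriver  # lazy import; only needed to start Chrome

    options = webdriver.ChromeOptions()
    options.add_argument(proxy_server_argument(host, port, scheme))
    return webdriver.Chrome(options=options)
```

For example, chrome_with_proxy("proxy.example.com", 8080) would start Chrome behind a hypothetical HTTP proxy at proxy.example.com:8080; for a SOCKS5 proxy, pass scheme="socks5".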


Why is your Selenium script easily detected?

1. The request frequency and behavior repetition rate are too high

A common reason your Selenium bot gets caught is high request frequency and repetitive behavior. If your script sends requests too quickly or keeps accessing the same page, it will trigger alerts. Actions that do not match how humans browse, such as always clicking the same button or always scraping data from the same location, are also red flags.

In addition, if your script scrapes data 24/7 and keeps accessing the website while most people are asleep, it is easily identified as a bot. To solve this, spread out the sources of your requests (for example, by rotating proxies and changing the User-Agent), vary the pages you visit and their order, and schedule the script to run at reasonable intervals during normal hours.
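The dispersal advice above can be sketched as a visit planner, assuming you already have a list of proxies and matching User-Agent strings (all names here are hypothetical). Each proxy stays paired with one User-Agent so that a single IP address does not appear to switch browsers between requests; the 8:00 to 23:00 window is an arbitrary example of "normal hours". Combining the plan with randomized pauses between visits avoids a fixed request interval.

```python
import itertools
import random


def plan_visits(urls, proxies, user_agents, rng=random):
    """Shuffle the visit order and rotate (proxy, User-Agent) identities.

    Returns a list of (url, proxy, user_agent) tuples; consecutive visits
    use different identities whenever more than one is available.
    """
    pairs = list(zip(proxies, user_agents))  # each proxy keeps one consistent UA
    if not pairs:
        raise ValueError("need at least one proxy/User-Agent pair")
    order = list(urls)
    rng.shuffle(order)  # avoid walking pages in the same fixed sequence each run
    identities = itertools.cycle(pairs)
    return [(url, *next(identities)) for url in order]


def within_active_hours(hour, start=8, end=23):
    """Only run during hours when ordinary visitors are also active (local time)."""
    return start <= hour < end
```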

2. Crawling only the source code

Many websites use JavaScript to generate content dynamically. If your Selenium crawler grabs only the initial HTML source and ignores JavaScript-rendered content, it may miss data and behave differently from human users. Adjust your Selenium setup for the target page so that it executes and interacts with JavaScript the way a regular browser user would.
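One way to make sure the crawler sees JavaScript-rendered content is to wait until an element that only appears after rendering is present before reading the page. Selenium's WebDriverWait with expected_conditions is the usual tool for this; the polling loop below spells out the same idea in plain Python, assuming a Selenium 4 driver (whose find_elements accepts the locator strategy string "css selector") and a CSS selector of your choosing. wait_for_rendered is a hypothetical name.

```python
import time


def wait_for_rendered(driver, css_selector, timeout=10.0, poll=0.5):
    """Poll until JavaScript has inserted at least one element matching css_selector.

    Returns the matching elements, or raises TimeoutError after `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        # "css selector" is the value of Selenium's By.CSS_SELECTOR constant.
        elements = driver.find_elements("css selector", css_selector)
        if elements:
            return elements
        if time.monotonic() >= deadline:
            raise TimeoutError(f"no element matched {css_selector!r} within {timeout}s")
        time.sleep(poll)
```

After driver.get(url), calling wait_for_rendered(driver, ".product-card") (a hypothetical selector) before reading driver.page_source ensures the scraped HTML includes the rendered items.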

3. Using a single browser and operating system configuration

Using the same browser settings for every crawling task makes your program easy to spot. Many websites can detect patterns in browser fingerprints, which include details such as your browser version, operating system, and even screen resolution. To avoid detection, run browser fingerprint and bot detection checks with appropriate tools before running your program, and start your automated scripts only after fixing the weaknesses they reveal. While the program runs, you can also vary the User-Agent string, browser configuration, and other details to simulate requests from different sources.
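Varying the configuration per session could look like the sketch below, assuming Chrome and Selenium 4 for Python. The profile pool is hypothetical and deliberately small. Note that the --user-agent switch changes only the User-Agent; values such as navigator.platform still reflect the real system, so a profile whose User-Agent contradicts them can be easier to detect, not harder. Re-running a fingerprint check after switching profiles helps catch such mismatches.

```python
import random

# Hypothetical pool of profiles: each User-Agent is paired with a window size
# typical of that kind of machine. Keep entries consistent with the real host.
PROFILES = [
    {
        "user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                      "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
        "window_size": (1920, 1080),
    },
    {
        "user_agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 "
                      "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
        "window_size": (1440, 900),
    },
]


def chrome_args_for(profile):
    """Translate one profile into Chrome command-line switches."""
    width, height = profile["window_size"]
    return [
        f"--user-agent={profile['user_agent']}",
        f"--window-size={width},{height}",
    ]


def pick_profile(rng=random):
    """Choose one profile per browser session rather than per request."""
    return rng.choice(PROFILES)
```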

4. Blocked IP address

If none of the above reasons apply, the IP address you are using may have opened too many sessions or sent too many requests and been detected and blocked by the website. This is a common way for websites to limit crawling; the solution is to use rotating IP proxies and change the identity information of your requests to get around the ban.
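Rotating away from a blocked IP can be sketched as a simple failover loop. Selenium does not expose HTTP status codes directly, so fetch here is a hypothetical callable you supply (for instance, one that opens a fresh browser session through the given proxy and obtains the status some other way). Treating 403 and 429 as block signals is an assumption, since sites also block with CAPTCHAs or ordinary-looking pages.

```python
BLOCK_STATUSES = {403, 429}  # "forbidden" and "too many requests"


def fetch_with_failover(fetch, url, proxies):
    """Try `url` through each proxy in turn, moving on when a response looks blocked.

    `fetch(url, proxy)` is a caller-supplied function returning an HTTP status code.
    Returns (proxy, status) for the first response that is not a block signal.
    """
    for proxy in proxies:
        status = fetch(url, proxy)
        if status not in BLOCK_STATUSES:
            return proxy, status
    raise RuntimeError(f"all {len(proxies)} proxies were blocked for {url}")
```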